The prevalence of false political news on platforms like Facebook and Twitter highlights the potential for social media to spread misinformation. This column uses an experiment during the 2022 US mid-term election campaign to assess the impact of various policies on the circulation of accurate and false news on X (formerly Twitter). Warning users about the prevalence of false news before they retweet a message is most effective at reducing the sharing of false news and increasing the sharing of true news, without diminishing overall user engagement. The authors also find that rapid algorithmic fact-checking, despite being error-prone, is more effective than accurate but expensive and slower professional fact-checking.

Social media has fundamentally altered the way people spend their time, communicate, and access information. A recent CEPR eBook (Campante et al. 2023) summarises cutting-edge research on the social and political ramifications of social media. Notably, social media's potential to spread misinformation is a key concern, as false political news is prevalent on platforms like Facebook, Twitter, and Reddit (Allcott and Gentzkow 2017, Vosoughi et al. 2018, Tufekci 2018, Haidt and Rose-Stockwell 2019, Persily and Tucker 2020a, 2020b). This is alarming because a substantial share of social media users rely on these platforms for political news, posing risks of misinformed decisions with severe consequences.

The public debate on the strategies to limit misinformation explores different policy options, including regulations of social media platforms such as the EU’s Digital Services Act (European Union 2023). However, regulatory measures must navigate the delicate balance between curbing misinformation and upholding free speech. In the US, constitutional limitations impede content moderation regulation, while the EU’s focus is on illegal content, which excludes a significant portion of political misinformation. Another major policy approach involves comprehensive digital literacy programmes to empower individuals to discern accurate information from false news (Guess et al. 2020). Social scientists also propose short-term practical and cost-effective interventions on social media platforms, such as confirmation clicks (Henry et al. 2022), fact-checking (Barrera et al. 2020, Henry et al. 2022, Nyhan 2020), and nudges prompting users to consider the consequences of sharing misinformation (Pennycook and Rand 2022). Recent evaluations of these short-term measures provide convincing and replicable evidence that, within specific contexts, each intervention effectively reduces the circulation of false information on social media.

In our recent paper (Guriev et al. 2023), we advance this literature by introducing a unified framework to assess the impact of various policies on the circulation of both accurate and false news. Unlike prior work relying on reduced-form analyses, our approach integrates experiments evaluating multiple policies simultaneously with a structural model of sharing decisions on social media. This enables us not only to compare the effectiveness of different policies, but also to analyse the mechanisms through which these policies operate.

During the 2022 US mid-term election campaign, we conducted a randomised controlled experiment involving 3,501 American Twitter users to closely emulate a real sharing experience on the platform. Within a survey environment, participants were presented with four political information tweets – two containing misinformation and two with accurate facts. The tweets were accompanied by screenshots, and participants were provided with direct links to access the tweets on Twitter. After seeing these tweets, random subgroups of participants underwent treatments simulating policy measures aimed at curbing the spread of false news. Subsequently, participants were asked if they would share one of these tweets on their Twitter accounts. Those who agreed were directed to Twitter, where they could confirm the retweet of their chosen tweet. We also collected information on participants' perceptions of tweet characteristics: they were asked to evaluate the accuracy and partisan leaning of each tweet.

Participants were randomly assigned to one of five groups. Participants in the first, our control group labelled ‘No policy’, proceeded directly to the sharing decision without receiving any treatment. The second group underwent the ‘Extra click’ treatment, which made sharing slightly more cumbersome by adding a confirmation click. The third group was subjected to the ‘Prime fake news circulation’ treatment, where, before sharing, participants received a warning message inspired by nudges discussed in Pennycook and Rand (2022): "Please think carefully before you retweet. Remember that there is a significant amount of false news circulating on social media." The fourth treatment, ‘Offer fact check’, informed participants that the two false tweets had been fact-checked by PolitiFact.com, a reputable fact-checking NGO, and provided a link to the fact-checks. In the last treatment, ‘Ask to assess tweets’, participants were prompted to evaluate the accuracy and partisan leaning of the four tweets before sharing, which brought accuracy and potential partisan bias to mind. Participants in all other groups completed these evaluations after the sharing decision.

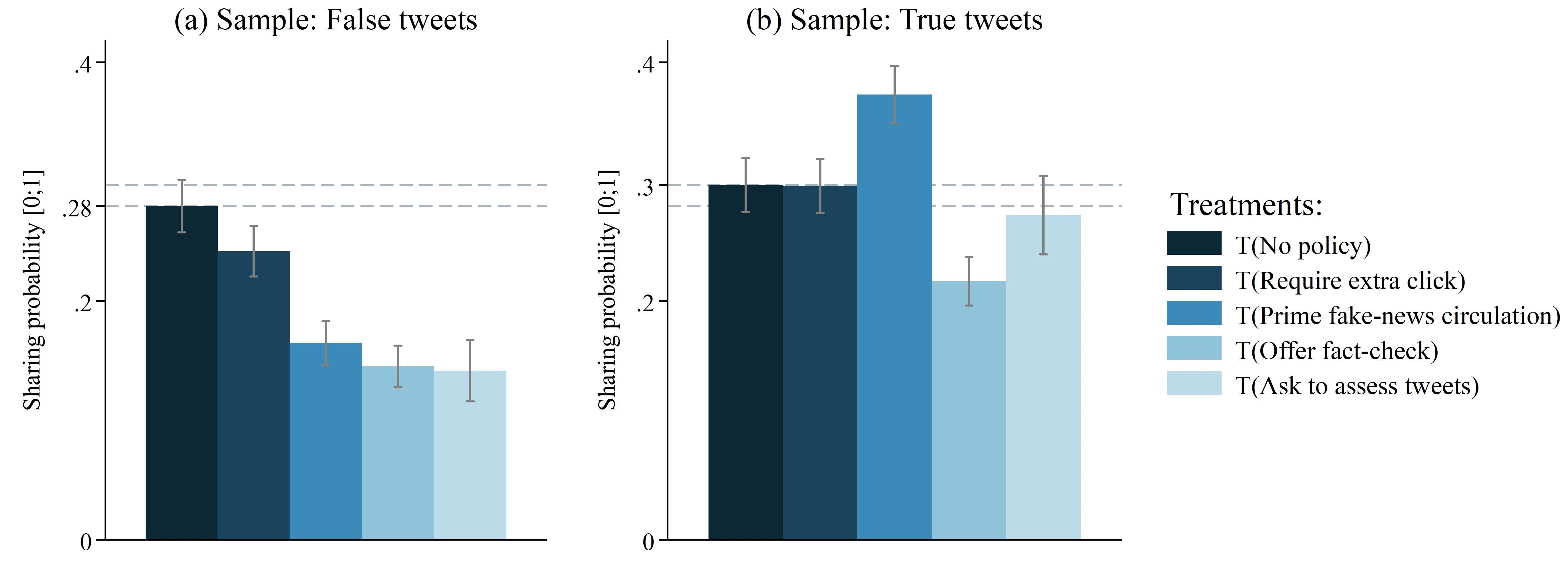

Figure 1 illustrates the reduced-form effects of the treatments on sharing true and false news. In the group with no policy intervention, 28% of participants shared one of the false tweets, while 30% shared one of the true tweets. Consistent with findings in prior research, all treatments led to a reduction in the sharing of false tweets. Specifically, the sharing rates of false news in (1) the extra click treatment, (2) the priming fake news circulation treatment, (3) the offering fact-check treatment, and (4) the treatment that prompts participants to assess content before sharing were 3.6, 11.5, 13.6, and 14.1 percentage points lower than in the no policy group, respectively.

Figure 1 Average treatment effects on sharing for false and true tweets

Source: Guriev et al. (2023).

However, the treatments have very different impacts on the sharing of true tweets. Requiring an extra click and prompting participants to assess tweets before sharing show no discernible effect. Offering a fact-check decreases the sharing of true tweets by 7.8 percentage points compared to the no policy group. In contrast, the priming treatment boosts the average sharing rate of true tweets by 8.1 percentage points. These findings establish a clear hierarchy of policy effectiveness in enhancing the accuracy of shared political content. The priming fake news circulation treatment emerges as the most potent strategy, fostering the ‘sharing discernment’ advocated by Pennycook and Rand (2022).
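The reported effects can be turned into implied sharing rates with simple arithmetic. The sketch below is only an illustration: the baseline rates and percentage-point effects come from the text, but entering "no discernible effect" as exactly zero and using the true-minus-false gap as a rough proxy for sharing discernment are assumptions made here, and the function and variable names are hypothetical.

```python
# Baseline sharing rates in the 'No policy' group, in percent (from the text).
BASELINE = {"false": 28.0, "true": 30.0}

# Treatment effects in percentage points relative to 'No policy' (from the text).
# Assumption: "no discernible effect" on true tweets is entered as 0.0.
EFFECTS = {
    "Extra click": {"false": -3.6, "true": 0.0},
    "Prime fake news circulation": {"false": -11.5, "true": 8.1},
    "Offer fact check": {"false": -13.6, "true": -7.8},
    "Ask to assess tweets": {"false": -14.1, "true": 0.0},
}


def implied_rates(effects, baseline=BASELINE):
    """Return implied sharing rates and the true-minus-false gap per group."""
    rows = {
        "No policy": {
            "false": baseline["false"],
            "true": baseline["true"],
            "gap": baseline["true"] - baseline["false"],
        }
    }
    for name, effect in effects.items():
        false_rate = baseline["false"] + effect["false"]
        true_rate = baseline["true"] + effect["true"]
        rows[name] = {
            "false": false_rate,
            "true": true_rate,
            "gap": true_rate - false_rate,  # rough proxy for discernment
        }
    return rows


if __name__ == "__main__":
    for name, row in implied_rates(EFFECTS).items():
        print(
            f"{name:<30} false={row['false']:5.1f}  "
            f"true={row['true']:5.1f}  gap={row['gap']:5.1f}"
        )
```

Under these assumptions, the priming treatment is the only one that both lowers the implied false-sharing rate and raises the implied true-sharing rate, which is why it produces the widest gap.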

To understand the underlying mechanisms behind the differential effects of treatments on the sharing of true and false news, we construct and structurally estimate a model of sharing political information on social media. In this model, the sender weighs the costs and benefits of sharing. The costs encompass the effort involved in sharing, such as the number of clicks required or the mental energy spent processing fact-checking information. The benefits of sharing are driven by three distinct motives: persuading the audience politically, signalling one's own partisan affiliation, and maintaining a reputation as a credible and trustworthy source.

We show that the decision to share a specific piece of news increases with the perceived veracity and the perceived partisan alignment of the news. Veracity positively influences the utility of sharing because of the reputation motive – well-informed receivers are more likely to view the sender as credible. Both partisan motives – persuasion and signalling – indicate that the sender gains a higher payoff when sharing news aligned with their views. The sharing decision also depends on the interaction between veracity and alignment. The direction of this effect depends on which partisan motive predominates. Persuasion is stronger when the news is more likely to be true, as receivers are inclined to adjust their actions accordingly. Conversely, signalling partisanship is stronger when the news is likely to be false, as a likely-false partisan message conveys a more credible signal of partisanship than a true partisan message. The results of the structural estimation of the model using experimental data show that the reputation motive is a crucial driver of sharing, while partisan motives also play a role. Moreover, partisan persuasion dominates signalling.

This analysis helps us understand the mechanisms through which various policy interventions affect sharing. Overall, anti-misinformation policies can influence sharing through three channels, which we refer to as (1) updating, (2) salience, and (3) cost of sharing. The updating channel operates through treatments potentially prompting the sender to revise their beliefs about the content’s veracity and partisan alignment. For example, fact-checking aims to alter users’ perceptions of content accuracy. The salience channel works through treatments altering the relative salience of reputation concerns compared to the partisan motives – so that the participants put a larger weight on the veracity of the news when deciding whether to share. The priming nudges, for instance, are designed to affect salience. Finally, each treatment affects the cost of sharing. For instance, adding an extra confirmation click increases the sharing cost in terms of the number of clicks for all types of content, whether true or false.
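To make the three channels concrete, here is a toy sketch of a sharing rule. It is not the structural model estimated in Guriev et al. (2023): the logistic form, the centring of veracity so that reputation is a gain for likely-true news and a loss for likely-false news, and all parameter values and names are hypothetical choices made purely for illustration.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SharingParams:
    """Hypothetical parameters of a stylised sharing rule (not estimates)."""
    reputation_weight: float = 1.0  # salience of the reputation motive
    partisan_weight: float = 0.5    # weight on perceived partisan alignment
    interaction: float = 0.2        # > 0 if persuasion dominates signalling
    cost: float = 1.0               # effort cost of sharing


def share_probability(veracity, alignment, params):
    """Logistic sharing probability for perceived veracity and alignment in [0, 1]."""
    utility = (
        params.reputation_weight * (2.0 * veracity - 1.0)  # gain if true, loss if false
        + params.partisan_weight * alignment
        + params.interaction * veracity * alignment
        - params.cost
    )
    return 1.0 / (1.0 + math.exp(-utility))


def raise_salience(params, factor):
    """Salience channel: scale up the weight on the reputation motive."""
    return replace(params, reputation_weight=params.reputation_weight * factor)


def add_friction(params, extra_cost):
    """Cost channel: add effort (e.g. an extra click) to every share."""
    return replace(params, cost=params.cost + extra_cost)


if __name__ == "__main__":
    base = SharingParams()
    true_tweet = {"veracity": 0.8, "alignment": 0.6}   # hypothetical perceptions
    false_tweet = {"veracity": 0.3, "alignment": 0.6}  # hypothetical perceptions

    scenarios = {
        "baseline": (base, false_tweet),
        "salience x2": (raise_salience(base, 2.0), false_tweet),
        "cost +0.3": (add_friction(base, 0.3), false_tweet),
        # Updating channel: the sender revises perceived veracity downward.
        "updating": (base, {**false_tweet, "veracity": 0.1}),
    }
    for name, (params, false_view) in scenarios.items():
        p_true = share_probability(params=params, **true_tweet)
        p_false = share_probability(params=params, **false_view)
        print(f"{name:<12} P(share true)={p_true:.3f}  P(share false)={p_false:.3f}")
```

In this toy rule, higher salience pushes sharing of true and false news in opposite directions, while extra friction lowers both, which mirrors the qualitative distinction between the salience and cost channels described above.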

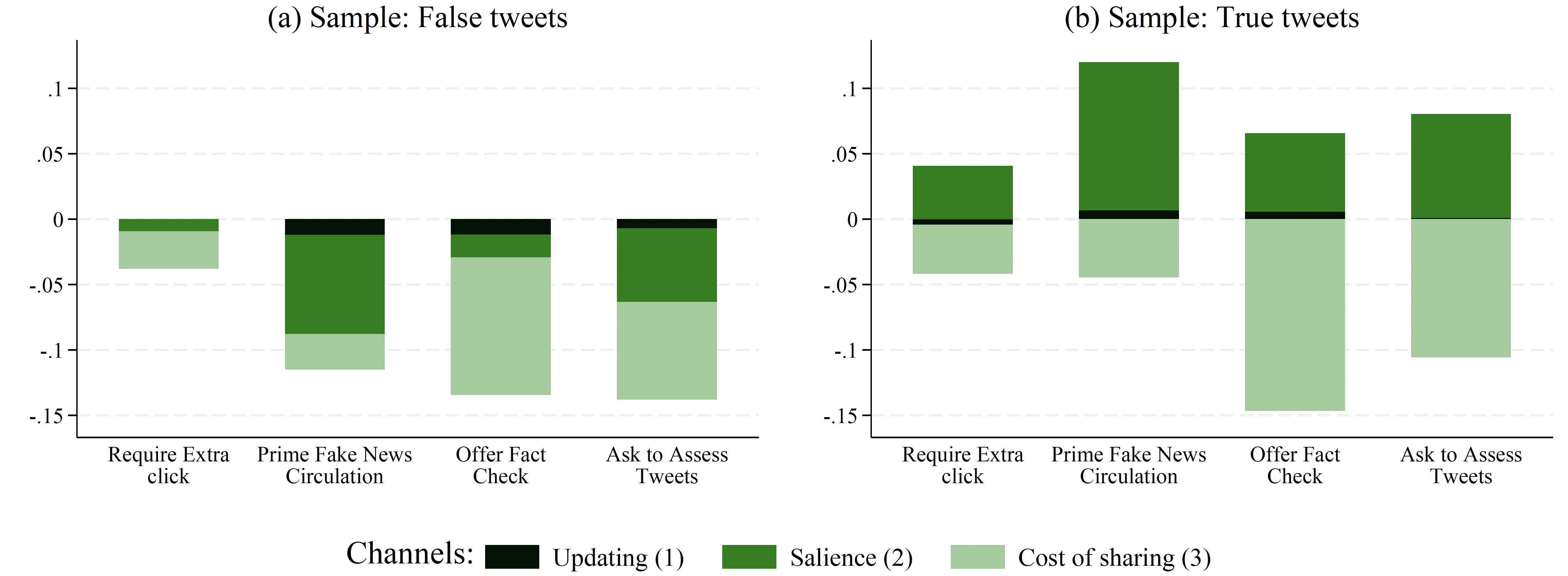

Figure 2 presents the decomposition of the effects of various treatments into these three channels. Surprisingly, the updating channel contributes minimally to the impact of treatments, despite some treatments affecting the sender's estimates of news veracity and partisan alignment. Instead, the overall effect of each treatment comes from the combination of how they influence the salience of reputation and the cost of sharing. Specifically, the salience channel drives the difference in treatment effects on sharing false and true news. Raising the salience of reputation positively impacts sharing of true news and adversely affects sharing of false news. All treatments, to varying degrees, increase the salience of reputation, with priming fake news circulation having the most substantial effect. Simultaneously, the costs of frictions associated with different treatments reduce sharing for both true and false news. Notably, the additional costs in the priming treatment are considerably lower than in the fact-checking treatment, making priming more effective in increasing the sharing of true news. This analysis explains why the priming treatment emerges as the most cost-effective policy.

Figure 2 Decomposition of the treatment effects into the three channels

Source: Guriev et al. (2023).

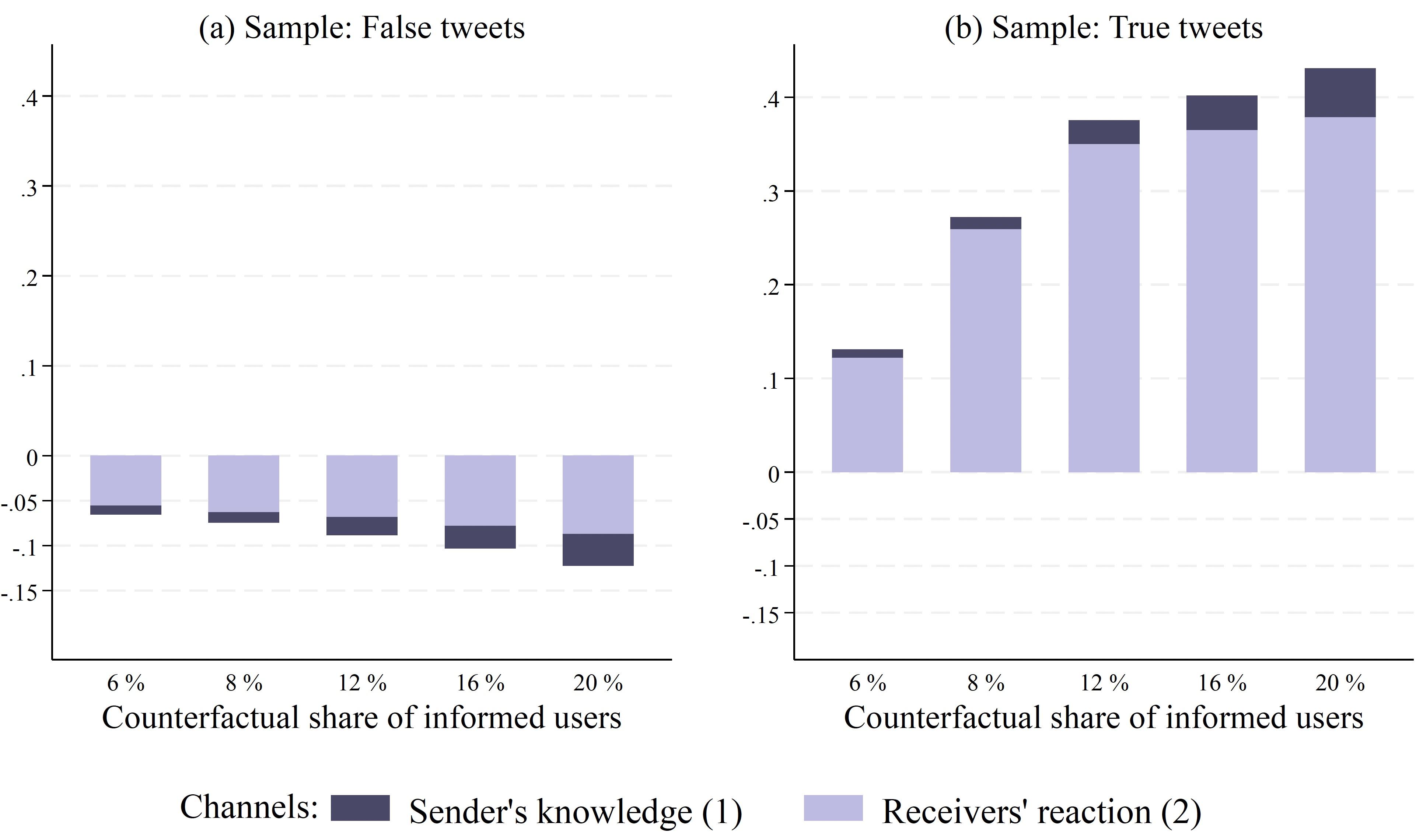

We also conducted a counterfactual analysis to assess the impact of digital literacy training on sharing behaviour. We model digital literacy training as an increase in the proportion of users capable of perfectly distinguishing true from false news. Only 4% of experiment participants were able to do this. Figure 3 shows the simulated results of the digital literacy training. It illustrates that an increase in digital literacy has a robust positive effect on the sharing of true news and a relatively modest negative impact on sharing false tweets. Increasing the share of informed individuals by 50% (from 4% to 6%) results in a 13.1 percentage point increase in the sharing of true tweets and a 6.6 percentage point decrease in the sharing of false tweets.

Figure 3 The effect of the change in digital literacy relative to the actual level (4%)

Source: Guriev et al. (2023).

This effect can be decomposed into two channels: the sender's knowledge and receivers' reactions. The sender's knowledge channel indicates that informed senders, whose share increases with digital literacy training, are less inclined to share false news. The receivers' reactions channel operates through the sender's expectation that receivers will react differently to the news they share, because receivers are more likely to be informed. The overall effect of digital literacy training is predominantly driven by this second, indirect channel of receivers' reactions. Digital literacy training makes senders less likely to share false news primarily because they anticipate that better-informed receivers would resist persuasion and would revise downward their view of the sender's knowledge if false news is shared.

Our analysis carries significant policy implications. First, it underscores that the most effective short-term policy involves priming fake news circulation, as advocated by Pennycook and Rand (2022). This approach reduces the sharing of false news and increases the sharing of true news without diminishing overall social media user engagement. Second, we demonstrate that fact-checking primarily operates through the salience mechanism rather than updating. Consequently, accurate yet expensive fact-checking by professional fact-checkers is less effective than faster, more cost-efficient – but more error-prone – algorithmic fact-checking. In the algorithmic approach, users are promptly informed that the content has been flagged as suspicious, prompting a heightened concern for veracity. Third, we highlight that digital literacy training is not only effective but also complements cheaper and more easily implementable short-term policies, especially the priming of fake news circulation, due to the mechanism through which it operates.

References

Allcott, H and M Gentzkow (2017), “Social Media and Fake News in the 2016 Election,” Journal of Economic Perspectives 31(2): 211–36.

Barrera, O, S Guriev, E Henry, and E Zhuravskaya (2020), “Facts, alternative facts, and fact checking in times of post-truth politics,” Journal of Public Economics 182: 104123.

Campante, F, R Durante and A Tesei (eds) (2023), The Political Economy of Social Media, CEPR Press.

European Union (2023), “Digital Services Act: Application of the Risk Management Framework to Russian disinformation campaigns”.

Guess, A M, M Lerner, B Lyons, J M Montgomery, B Nyhan, J Reifler, and N Sircar (2020), “A digital media literacy intervention increases discernment between mainstream and false news in the United States and India,” Proceedings of the National Academy of Sciences of the United States of America 117(27): 15536–15545.

Guriev, S, E Henry, T Marquis, and E Zhuravskaya (2023), “Curtailing False News, Amplifying Truth,” CEPR Discussion Paper No. 18650.

Haidt, J and T Rose-Stockwell (2019), “The Dark Psychology of Social Networks: Why it feels like everything is going haywire,” The Atlantic, December.

Henry, E, E Zhuravskaya, and S Guriev (2022), “Checking and Sharing Alt-Facts,” American Economic Journal: Economic Policy 14(3): 55–86.

Nyhan, B (2020), “Facts and Myths about Misperceptions,” Journal of Economic Perspectives 34(3): 220–236.

Pennycook, G and D Rand (2022), “Accuracy prompts are a replicable and generalizable approach for reducing the spread of misinformation,” Nature Communications 13(2333).

Persily, N and J A Tucker (2020a), Social Media and Democracy, Cambridge University Press.

Persily, N and J A Tucker (eds) (2020b), Social Media and Democracy: The State of the Field, Prospects for Reform, Cambridge University Press.

Tufekci, Z (2018), “How social media took us from Tahrir Square to Donald Trump,” MIT Technology Review.

Vosoughi, S, D Roy, and S Aral (2018), “The spread of true and false news online,” Science 359: 1146–1151.